Knowledge

Revision as of 07:40, 30 June 2018


Knowledge is the possession of information; its acquisition involves complex cognitive processes: perception, learning, communication, association and reasoning.

Inside a chess program, knowledge is manifested either as procedural or as declarative knowledge. The declarative a priori knowledge about the rules of chess is immanent in the move generator in conjunction with check detection. Further declarative knowledge is coded as rules of thumb in the evaluation function, as persistent perfect knowledge from retrograde analysis, or as empirical knowledge, mostly from human experience, used to retrieve moves from a hand-crafted opening book. Procedural knowledge is applied by Learning, as well as by Search to back up declarative knowledge. However, the Search versus Knowledge trade-off in computer chess and games refers to heuristic or perfect knowledge.


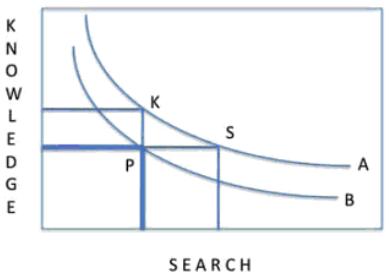

Search versus Knowledge

The trade-offs of search versus knowledge [2]

Curves A and B represent fixed performance levels, say 2000 for B and 2200 for A. The curves indicate that there are many ways to achieve a given level of performance, either with little knowledge and a lot of search, or vice versa. If a program has achieved point P on curve B, it may reach curve A anywhere between point S, by adding search only, and point K, by adding knowledge only.

In Practice

Andreas Junghanns and Jonathan Schaeffer in Search versus knowledge in game-playing programs revisited [3]:

The difficulty lies in quantifying the knowledge axis. Perfect knowledge assumes an oracle, which for most games we do not have. However, we can approximate an oracle by using a high-quality, game playing program that performs deep searches. Although not perfect, it is the best approximation available. Using this, how can we measure the quality of knowledge in the program?

A heuristic evaluation function, as judged by an oracle, can be viewed as a combination of two things: oracle knowledge and noise. The oracle knowledge is beneficial and improves the program's play. The noise, on the other hand, represents the inaccuracies in the program's knowledge. It can be introduced by several things, including knowledge that is missing, over- or under-valued, and/or irrelevant. As the noise level increase, the beneficial contribution of the knowledge is overshadowed.

By definition, an oracle has no noise. We can measure the quality of the heuristic evaluation in a program by the amount of noise that is added into it. To measure this, we add a random number to each leaf node evaluation ...
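The noise experiment described in the quotation can be illustrated with a toy model. The following is a minimal sketch, not the authors' code: it assumes a hypothetical game tree given as nested Python lists with integer leaf scores, and a uniform integer noise in [-noise, +noise] added to each leaf evaluation (the distribution is an assumption; the quotation only says a random number is added).

```python
import random
from typing import List, Union

# Toy game tree: a leaf is an int score from the maximizer's point of
# view; an interior node is a list of child subtrees.
Tree = Union[int, List["Tree"]]


def noisy_minimax(node: Tree, maximizing: bool, noise: int,
                  rng: random.Random) -> int:
    """Plain minimax where each leaf evaluation is perturbed by a
    uniform random integer in [-noise, +noise]."""
    if isinstance(node, int):
        # randint(0, 0) returns 0, so noise=0 gives the exact leaf value
        return node + rng.randint(-noise, noise)
    values = [noisy_minimax(child, not maximizing, noise, rng)
              for child in node]
    return max(values) if maximizing else min(values)


if __name__ == "__main__":
    tree = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]
    print(noisy_minimax(tree, True, 0, random.Random(1)))  # 3
    for noise in (1, 5, 20):
        print(noise, noisy_minimax(tree, True, noise, random.Random(1)))
```

With noise set to 0 the search returns the exact minimax value. Because minimax only takes maxima and minima of leaf values, perturbing every leaf by at most `noise` shifts the root value by at most `noise` as well, but it can still change which move is chosen, which is what degrades play as the noise grows.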

HiTech versus LoTech

At the Advances in Computer Chess 5 conference in 1987, Hans Berliner et al. introduced an interesting experiment: HiTech, with a sophisticated evaluation, competed at different search depths against LoTech, almost the same program but with a rudimentary evaluation [4]. According to Peter Kouwenhoven [5], Berliner's conclusion was that more was to be gained by increasing HiTech's knowledge at constant speed than by further increasing its speed while keeping knowledge constant. The abstract from the extended 1990 paper [6]:

Chess programs can differ in depth of search or in the evaluation function applied to leaf nodes or both. Over the past 10 years, the notion that the principal way to strengthen a chess program is to improve its depth of search has held sway. Improving depth of search undoubtedly does improve a program's strength. However, projections of potential gain have time and again been found to overestimate the actual gain.

We examine the notion that it is possible to project the playing strength of chess programs by having different versions of the same program (differing only in depth of search) play each other. Our data indicates that once a depth of “tactical sufficiency” is reached, a knowledgeable program can beat a significantly less knowledgeable one almost all of the time when both are searching to the same depth. This suggests that once a certain knowledge gap has been opened up, it cannot be overcome by small increments in searching depth. The conclusion from this work is that extending the depth of search without increasing the present level of knowledge will not in any foreseeable time lead to World Championship level chess. The approach of increasing knowledge has been taken in the HiTech chess machine.
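To express a phrase such as "beat ... almost all of the time" as a rating gap, one common convention is the logistic Elo model, in which a player rated D points higher has an expected score of 1 / (1 + 10^(-D/400)). The sketch below, with hypothetical function names, inverts that formula; the 90% score in the example is illustrative and not a figure from the paper.

```python
import math


def expected_score(diff: float) -> float:
    """Expected score under the logistic Elo model for a player
    rated `diff` points above the opponent."""
    return 1.0 / (1.0 + 10.0 ** (-diff / 400.0))


def elo_diff(score: float) -> float:
    """Rating difference implied by a match score fraction (inverse of
    expected_score). Scores of exactly 0 or 1 have no finite answer."""
    if not 0.0 < score < 1.0:
        raise ValueError("score must be strictly between 0 and 1")
    return -400.0 * math.log10(1.0 / score - 1.0)


if __name__ == "__main__":
    # Illustrative only: 9 points out of 10 games.
    print(round(elo_diff(0.9)))  # 382
```

A perfect 100% result has no finite rating difference under this model, which is why `elo_diff` rejects it; in practice such matches only give a lower bound on the gap.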

Ed Schröder's Conclusion

Ed Schröder concluded that HiTech's extra knowledge was worth just one ply in computer-computer play [7][8], and further elaborated on tactical evaluation knowledge that was helpful for the early Rebel searching 5-7 plies, but no longer needed by Rebel searching 13-15 plies on 2001 hardware [9][10]:

Some specific chess knowledge through the years become out-dated due to the speed of nowadays computers. An example: In the early days of computer chess, say the period 1985-1989 I as hardware had a 6502 running at 5 Mhz. Rebel at that time could only search 5-7 plies on tournament time control. Such a low depth guarantees you one thing: horizon effects all over, thus losing the game.

To escape from the horizon effect all kind of tricks were invented, chess knowledge about dangerous pins, knight forks, double attacks, overloading of pieces and reward those aspects in eval. Complicated and processor time consuming software it was (15-20% less performance) but it did the trick escaping from the horizon effect in a reasonable way.

Today we run chess program on 1500 Mhz machines and instead of the 5-7 plies Rebel now gets 13-15 plies in the middle game and the horizon effect which was a major problem at 5 Mhz slowly was fading away.

So I wondered, what if I throw that complicated "anti-horizon" code out of Rebel, is it still needed? So I tried and found out that Rebel played as good with the "anti-horizon" code as without the code. In other words, the net gain was a "free" speed gain of 15-20%, thus an improvement.

One aspect of chess programming is that your program is in a constant state of change due to the state of art of nowadays available hardware. I am sure a Rebel at 10 Ghz several parts of Rebel need a face-lift to get the maximum out of the new speed monster.
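A rough way to see why a "free" 15-20% speed gain is an improvement: if the number of nodes needed to reach depth d grows like EBF^d for some effective branching factor EBF, a speedup factor s buys about log(s) / log(EBF) extra plies at the same time control. The EBF values below are assumptions chosen for illustration, not numbers from Schröder's post, and `extra_plies` is a hypothetical helper.

```python
import math


def extra_plies(speedup: float, ebf: float) -> float:
    """Extra search depth (in plies) bought by a constant-factor speedup,
    assuming the node count for depth d grows like ebf ** d."""
    if speedup <= 0 or ebf <= 1:
        raise ValueError("need speedup > 0 and ebf > 1")
    return math.log(speedup) / math.log(ebf)


if __name__ == "__main__":
    # Assumed effective branching factors; Schröder's post gives none.
    for ebf in (2.0, 3.0, 6.0):
        gains = [round(extra_plies(s, ebf), 2) for s in (1.15, 1.20)]
        print(f"EBF {ebf}: {gains}")
```

With an assumed EBF of 2, a 20% speedup corresponds to roughly a quarter of a ply; the larger the branching factor, the less depth a constant-factor speedup yields.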

Search versus Evaluation

Mark Uniacke, in a reply to Ed Schröder's thread Search or Evaluation?, mentioning the 1st Computer Olympiad, Null Move Pruning, HIARCS, Woodpusher, E6P, Rebel, Fritz ... [11]:

I am not saying search is the only reason but that search is more significant in the Elo jump than other factors. Thanks for the interesting history which in general I agree with but I should add for clarification...

In 1989 I was exposed to the null move in HIARCS' first official computer tournament at the Olympiad in London. John Hamlen had written his program Woodpusher as part of his M.Sc project investigating the null move exclamation and although Woodpusher did not do well in that tournament I had the good fortune to discuss null move with John and reading his project which interested me very much. John and I had many discussions on computer chess over the following months.

That Olympiad probably had an impact on you too and not only because Rebel won Gold above Mephisto X and Fidelity X for the first time but because there was a program there running quite fast (at that time in history) called E6P (so named because it could reach 6 ply full width!) running on an Acorn ARM based machine. Jan was operating Rebel at the tournament as you know and I noticed Jan take a keen interest in this new machine.

Frans was using null move in Fritz 2 in Madrid 1992 (I am not sure about Fritz 1). I am sure you remember that tournament fondly. I remember at Madrid having a discussion with a few programmers including Richard about what on earth was Fritz 2 doing to reach the depths it was attaining, this seed of interest made me investigate search enhancements carefully after Madrid and it was then that I began re-investigating the null move. It was not long before HIARCS began using null moves too and by Christmas 1992 I had a working implementation which gave a big jump over Hiarcs 1 which had only been released shortly before. I had much fun playing my Hiarcs 1.n against Mephisto Risc 1 in late 1992, early 1993 and that sort of closes the circle on the E6P story.

Even so, the null move idea still evolved and my implementation today is much better than it was initially and so it can be for other ideas. We can all profit from these techniques but some gain more than others if they find new ways to exploit it. Rebel 7/8/9 and Hiarcs 5/6/7 battled hard in those years and my big jump to the top of the rating lists happened partly because of a search change to Hiarcs 5/6 which led at one stage to a 71 Elo lead on the SSDF. I recall Rebel and Hiarcs exchanging the lead on the SSDF list a number of times in those years.

So we agree the impact search has made on computer chess progress is clear. Now we diverge on what might be the reason for new progress in the field. Clearly we have good search enhancements on cut nodes and all nodes, but that does not mean they cannot be improved and enhanced or in fact that another technique might prove to be superior or complementary. Meanwhile there can be no doubt that progress is made positionally irrespective of search but I do not believe it can be the reason for a breakthrough jump in chess strength but rather a steady climb. But wait!

Maybe there is a middle way, a third avenue of improvement which sort of falls between the two pillars of search and evaluation and that is search intelligence. Something both of us have practiced in our programs for years but maybe not properly exploited. The ability of the eval to have a more significant impact on the search than traditionally has been the case. I think this area has been relatively unexplored and offers interesting potential.

Knowing

Declarative Knowledge

A Priori

Heuristic Knowledge

Empirical Knowledge

Perfect Knowledge

Perfect knowledge usually incorporates analysis or stored results from exhaustive search, so that, except at the root, no further search is required at interior nodes where it applies. An oracle might be considered a "perfect" evaluation.

Interior Node Recognizer

Endgame Databases

Endgame databases are probed inside a Recognizer framework if a certain material constellation is detected.
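The recognizer idea can be sketched as a table of small functions keyed by material signature, consulted at interior nodes before searching deeper; a hit returns an exact score and the subtree is not searched. The sketch below is hypothetical: the position format, the signature strings such as "KNvK" with the side having more pieces first, and all function names are assumptions, and it covers only draws by insufficient material. An engine could register endgame database probes in the same kind of table.

```python
from typing import Callable, Dict, Optional

DRAW = 0  # score for a dead draw, from either side's point of view

# A recognizer returns an exact score, or None if it cannot decide
# the position, in which case normal search would continue.
Recognizer = Callable[[dict], Optional[int]]


def recognize_insufficient_material(pos: dict) -> Optional[int]:
    # Neither K vs K, K+N vs K nor K+B vs K allows a checkmate,
    # so the exact value is a draw regardless of piece placement.
    return DRAW


# Hypothetical dispatch table: "<side with more pieces>v<other side>".
RECOGNIZERS: Dict[str, Recognizer] = {
    "KvK": recognize_insufficient_material,
    "KNvK": recognize_insufficient_material,
    "KBvK": recognize_insufficient_material,
}


def material_signature(white: str, black: str) -> str:
    """Put the side with more pieces first, e.g. ("K", "KN") -> "KNvK"."""
    first, second = sorted((white, black), key=len, reverse=True)
    return f"{first}v{second}"


def probe_recognizer(pos: dict) -> Optional[int]:
    """Look up and run the recognizer for this material, if any."""
    recognizer = RECOGNIZERS.get(material_signature(pos["white"], pos["black"]))
    return recognizer(pos) if recognizer is not None else None


if __name__ == "__main__":
    print(probe_recognizer({"white": "KN", "black": "K"}))  # 0 (draw)
    print(probe_recognizer({"white": "KR", "black": "K"}))  # None: search on
```

Inside a search, `probe_recognizer` would be called before move generation; a non-None result is returned directly as the node's value, which is where exact knowledge replaces further search.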

Procedural Knowledge

See also

- Artificial Intelligence

- Diminishing Returns

- Memory

- Opponent Model Search

- Psychology

- Strategy

- Tactics

Publications

1970 ...

- Marvin Minsky (1974). A Framework for Representing Knowledge. MIT-AI Laboratory Memo 306

- Coen Zuidema (1974). Chess: How to Program the Exceptions? Technical Report IW21/74, Mathematical Center Amsterdam. pdf

1975 ...

- Donald Michie (1976). An Advice-Taking System for Computer Chess. Computer Bulletin, Ser. 2, Vol. 10, pp. 12-14. ISSN 0010-4531.

- Jack Good (1977). Dynamic Probability, Computer Chess, and the Measurement of Knowledge. Machine Intelligence, Vol. 8 (eds. E. Elcock and Donald Michie), pp. 139-150. Ellis Horwood, Chichester.

- Ivan Bratko, Danny Kopec, Donald Michie (1978). Pattern-Based Representation of Chess Endgame Knowledge. The Computer Journal, Vol. 21, No. 2, pp. 149-153. pdf

- Donald Michie, Ivan Bratko (1978). Advice Table Representations of Chess End-Game Knowledge. Proceedings 3rd AISB/GI Conference, pp. 194-200.

- David Wilkins (1979). Using Patterns and Plans to Solve Problems and Control Search. Ph.D. thesis, Computer Science Dept, Stanford University, AI Lab Memo AIM-329

- Max Bramer, Mike Clarke (1979). A Model for the Representation of Pattern-Knowledge for the Endgame in Chess. International Journal of Man-Machine Studies, Vol. 11, No.5

1980 ...

- Ivan Bratko, Donald Michie (1980). A Representation of Pattern-Knowledge in Chess Endgames. Advances in Computer Chess 2

- David Wilkins (1980). Using patterns and plans in chess. Artificial Intelligence, vol. 14, pp. 165-203. Reprinted (1988) in Computer Chess Compendium

- David Wilkins (1982). Using Knowledge to Control Tree Searching. Artificial Intelligence, vol. 18, pp. 1-51.

- Alen Shapiro, Tim Niblett (1982). Automatic Induction of Classification Rules for Chess End game. Advances in Computer Chess 3

- Hans Berliner (1982). Search vs. knowledge: an analysis from the domain of games. Technical Report Department of Computer Science, Carnegie Mellon University

- Allen Newell (1982). The Knowledge Level. Artificial Intelligence, Vol. 18, No. 1

- Raymond Smullyan (1982). An Epistemological Nightmare. MIT

- David Wilkins (1983). Using chess knowledge to reduce search. In Chess Skill in Man and Machine (Peter W. Frey, ed.), Ch. 10, 2nd Edition, Springer-Verlag.

- Danny Kopec (1983). Human and Machine Representations of Knowledge. Ph.D. thesis, University of Edinburgh, supervisor: Donald Michie

- Alen Shapiro (1983). The Role of Structured Induction in Expert Systems. University of Edinburgh, Machine Intelligence Research Unit (Ph.D. thesis) [12]

- Jonathan Schaeffer (1984). The Relative Importance of Knowledge. ICCA Journal, Vol. 7, No. 3

- Albrecht Heeffer (1984). Automated Acquisition on Concepts for the Description of Middle-game Positions in Chess. Turing Institute, Glasgow, Scotland, TIRM-84-005

1985 ...

- Albrecht Heeffer (1985). Validating Concepts from Automated Acquisition Systems. IJCAI 85, pdf

- Reiner Seidel (1985). Grammatical Description of Chess Positions, Data-Base versus Human Knowledge. ICCA Journal, Vol. 8, No. 3

- Jonathan Schaeffer, Tony Marsland (1985). The Utility of Expert Knowledge. Proceedings IJCAI 85, pp. 585-587. Los Angeles.

- Alen Shapiro, Donald Michie (1986). A Self-commenting Facility for Inductively Synthesised Endgame Expertise. Advances in Computer Chess 4

- Bernd Owsnicki, Kai von Luck (1986). N.N.: A Case Study in Chess Knowledge Representation. Advances in Computer Chess 4

- Jonathan Schaeffer (1986). Experiments in Search and Knowledge. Ph.D. Thesis, University of Waterloo. Reprinted as Technical Report TR 86-12, Department of Computing Science, University of Alberta, Edmonton, Alberta.

- Peter W. Frey (1986). Fuzzy Production Rules in Chess. ICCA Journal, Vol. 9, No. 4

- Peter W. Frey (1986). A Bit-Mapped Classifier. BYTE, Vol. 11, No. 12, pp. 161-172.

- Subhash Kak (1987). Patanjali and Cognitive Science. Vitasta Publishing [13]

- Alen Shapiro (1987). Structured Induction in Expert Systems. Turing Institute Press in association with Addison-Wesley Publishing Company, Wokingham, UK. ISBN 0-201-178133. amazon[14]

- Stephen Muggleton (1988). Inductive Acquisition of Chess Strategies. Machine Intelligence 11 (eds. Jean Hayes Michie, Donald Michie, and J. Richards), pp. 375-389. Clarendon Press, Oxford, U.K. ISBN 0-19-853718-2.

- Reiner Seidel (1989). A Model of Chess Knowledge - Planning Structures and Constituent Analysis. Advances in Computer Chess 5

- Hans Berliner, Carl Ebeling (1989). Pattern Knowledge and Search: The SUPREM Architecture. Artificial Intelligence, Vol. 38, No. 2, pp. 161-198. ISSN 0004-3702.

- Revised as Hans Berliner, Carl Ebeling (1990). Hitech. Computers, Chess, and Cognition

- Hans Berliner, Gordon Goetsch, Murray Campbell, Carl Ebeling (1989). Measuring the Performance Potential of Chess Programs, Advances in Computer Chess 5

- Hermann Kaindl (1989). Towards a Theory of Knowledge. Advances in Computer Chess 5

1990 ...

- Hans Berliner, Gordon Goetsch, Murray Campbell, Carl Ebeling (1990). Measuring the Performance Potential of Chess Programs. Artificial Intelligence, Vol. 43, No. 1

- Kiyoshi Shirayanagi (1990). Knowledge Representation and its Refinement in Go Programs. Computers, Chess, and Cognition

- Robert Levinson, Feng-hsiung Hsu, Tony Marsland, Jonathan Schaeffer, David Wilkins (1991). The Role of Chess in Artificial Intelligence Research. IJCAI 1991, pdf, also in ICCA Journal, Vol. 14, No. 3, pdf

- Christian Posthoff, Michael Schlosser, Jens Zeidler (1993). Search vs. Knowledge? - Search and Knowledge! In: Proc. 3rd KADS Meeting, Munich, March 8-9 1993, Siemens AG, Corporate Research and Development, 305-326, 1993.

- Steven Walczak, Douglas D. Dankel II (1993). Acquiring Tactical and Strategic Knowledge with a Generalized Method for Chunking of Game Pieces. International Journal of Intelligent Systems 8 (2), 249-270.

- Christian Posthoff, Michael Schlosser, Rainer Staudte, Jens Zeidler (1994). Transformations of Knowledge. Advances in Computer Chess 7

- Robert Levinson, Gil Fuchs (1994). A Pattern-Weight Formulation of Search Knowledge. UCSC-CRL-94-10, CiteSeerX

1995 ...

- Richard Fikes (1996). Ontologies: What Are They, and Where's The Research? KR 1996

- Andreas Junghanns, Jonathan Schaeffer (1997). Search versus knowledge in game-playing programs revisited. IJCAI-97, Vol. 1, pdf

- Johannes Fürnkranz (1997). Knowledge Discovery in Chess Databases: A Research Proposal. Technical Report OEFAI-TR-97-33, Austrian Research Institute for Artificial Intelligence, zipped ps, pdf [15]

- Krzysztof Krawiec, Roman Slowinski, Irmina Szczesniak (1998). Pedagogical Method for Extraction of Symbolic Knowledge from Neural Networks. Rough Sets and Current Trends in Computing 1998

- Edward J. Quigley, Anthony Debons (1999). Interrogative Theory of Information and Knowledge. SIGCPR '99, pdf

2000 ...

- Michael Thielscher (2000). Representing the Knowledge of a Robot. KR 2000, CiteSeerX

- Krzysztof Krawiec (2002). Genetic Programming-based Construction of Features for Machine Learning and Knowledge Discovery Tasks. Genetic Programming and Evolvable Machines, Vol. 3, No. 4

- Stuart Russell, Peter Norvig (2003). Artificial Intelligence: A Modern Approach, 2nd edition, 3rd edition 2009

- Stuart Russell (2003). Rationality and Intelligence. In Renee Elio (Ed.), Common sense, reasoning, and rationality, Oxford University Press, pdf

- Aleksander Sadikov, Ivan Bratko, Igor Kononenko (2003). Search versus Knowledge: An Empirical Study of Minimax on KRK. Advances in Computer Games 10, pdf

- Tristan Caulfield (2004). Acquiring and Using Knowledge in Computer Chess. BSc Computer Science, University of Bath, pdf

- Elias M. Awad, Hassan M. Ghaziri (2004, 2010). Knowledge Management Systems. Pearson Education India, Knowledge Management Systems - Lecture Notes, LaTeX2HTML by Nikos Drakos, Ross Moore

- Nick Pelling (2004). The Network Is The Knowledge: Ideology & Strategy in Mintzberg's Ten Schools. MBA dissertation, Kingston University Business School, Surrey, UK

2005 ...

- Christian Posthoff, Michael Schlosser (2005). Optimal strategies — Learning from examples — Boolean equations. in Klaus P. Jantke, Steffen Lange (eds.) (2005). Algorithmic Learning for Knowledge-Based Systems, Lecture Notes in Computer Science 961, Springer

- Aleksander Sadikov, Ivan Bratko (2006). Search Versus Knowledge Revisited Again. CG 2006

- David J. Stracuzzi (2006). Scalable Knowledge Acquisition through Cumulative Learning and Memory Organization. Ph.D. thesis, University of Massachusetts Amherst, advisor Paul E. Utgoff, pdf

- Guillaume Chaslot, Louis Chatriot, Christophe Fiter, Sylvain Gelly, Jean-Baptiste Hoock, Julien Pérez, Arpad Rimmel, Olivier Teytaud (2008). Combining expert, offline, transient and online knowledge in Monte-Carlo exploration. pdf

- Guillaume Chaslot, Christophe Fiter, Jean-Baptiste Hoock, Arpad Rimmel, Olivier Teytaud (2009). Adding Expert Knowledge and Exploration in Monte-Carlo Tree Search. Advances in Computer Games 12, pdf, pdf

2010 ...

- Matej Guid (2010). Search and Knowledge for Human and Machine Problem Solving, Ph.D. thesis, University of Ljubljana, pdf [16]

- Shi-Jim Yen, Jung-Kuei Yang, Kuo-Yuan Kao, Tai-Ning Yang (2012). Bitboard Knowledge Base System and Elegant Search Architectures for Connect6. Knowledge-Based Systems, Vol. 34

- Bo-Nian Chen, Hung-Jui Chang, Shun-Chin Hsu, Jr-Chang Chen, Tsan-sheng Hsu (2013). Multilevel Inference in Chinese Chess Endgame Knowledge Bases. ICGA Journal, Vol. 36, No. 4 » Chinese Chess, Endgame Tablebases

- Bo-Nian Chen, Hung-Jui Chang, Shun-Chin Hsu, Jr-Chang Chen, Tsan-sheng Hsu (2014). Advanced Meta-knowledge for Chinese Chess Endgame Knowledge Bases. ICGA Journal, Vol. 37, No. 1

2015 ...

- Tamal T. Biswas, Kenneth W. Regan (2015). Quantifying Depth and Complexity of Thinking and Knowledge. ICAART 2015, pdf

Forum Posts

1996 ...

- Incoporating chess knowledge in chess programs by Valavan Manohararajah, rgcc, June 26, 1996

- computer chess "oracle" ideas... by Robert Hyatt, rgcc, April 1, 1997 » Oracle

- Knowledge is not elegant by Don Dailey, CCC, June 14, 1998

- The end of computer chess progress? by Ed Schröder, rgcc, March 06, 1999

- The Limits of Positional Knowledge by Michael Neish, CCC, November 11, 1999

2000 ...

- suggestions for (a new generation of) chess knowledge using engines by Ingo Lindam, CCC, October 23, 2002

- likelihood instead of pawnunits? + chess knowledge by Ingo Lindam, CCC, October 25, 2002

- Knowledge by Sergei S. Markoff, CCC, September 26, 2004

2005 ...

- The superior Rybka chess knowledge by Chrilly Donninger, CCC, January 18, 2006 » Rybka

- knowledge and blitz; search and long games by Joseph Ciarrochi, CCC, February 15, 2006

- Search or Evaluation? by Ed Schröder, Hiarcs Forum, October 05, 2007 » Search, Evaluation

- Re: Search or Evaluation? by Mark Uniacke, Hiarcs Forum, October 14, 2007

2010 ...

- hardware advances - a different perspective by Robert Hyatt, CCC, September 09, 2010

- old crafty vs new crafty on new hardware by Robert Hyatt, CCC, September 11, 2010 » Crafty

- Crafty tests show that Software has advanced more by Don Dailey, CCC, September 12, 2010

- Final results - Crafty - hardware vs software by Robert Hyatt, CCC, September 13, 2010 » Crafty

- Deep Blue vs Rybka by Don Dailey, CCC, September 13, 2010 » Deep Blue, Rybka

- hardware doubling number for Crafty by Robert Hyatt, CCC, September 15, 2010 » Crafty

- Ahhh... the holy grail of computer chess by Marcel van Kervinck, CCC, August 23, 2011

- Is search irrelevant when computing ahead of very big trees? by Fermin Serrano, CCC, July 24, 2013 » Search

- Improve the search or the evaluation? by Jens Bæk Nielsen, CCC, August 31, 2013 » Search versus Evaluation

- Slow Searchers? by Michael Neish, CCC, November 02, 2013

- Chessprogams with the most chessknowing by Joe Boden, CCC, December 19, 2014

2015 ...

- An attempt to measure the knowledge of engines by Kai Laskos, CCC, March 23, 2015

- Re: Chessprogams with the most chessknowing by Vincent Lejeune, CCC, February 18, 2017

- Re: Chessprogams with the most chessknowing by Alvaro Cardoso, CCC, February 18, 2017

- Re: Chessprogams with the most chessknowing by Mark Lefler, CCC, February 18, 2017 » Komodo

- Re: Chessprogams with the most chessknowing by Marco Costalba, CCC, February 19, 2017 » Stockfish

External Links

Knowledge

- Knowledge from Wikipedia

- Knowledge acquisition from Wikipedia

- Knowledge discovery from Wikipedia

- Knowledge engineering from Wikipedia

- Knowledge Engineering Environment from Wikipedia

- Knowledge extraction from Wikipedia

- Knowledge management from Wikipedia

- Knowledge representation and reasoning from Wikipedia

- Knowledge transfer from Wikipedia

Related Topics

- Awareness from Wikipedia

- Belief from Wikipedia

- Cognition from Wikipedia

- Consciousness from Wikipedia

- Data mining from Wikipedia

- Epistemology from Wikipedia

- Experience from Wikipedia

- Expert from Wikipedia

- Expert system from Wikipedia

- Imagination from Wikipedia

- Instinct from Wikipedia

- Intelligence from Wikipedia

- Intuition (philosophy) from Wikipedia

- Intuition (psychology) from Wikipedia

- Learning from Wikipedia

- Mind from Wikipedia

- Ontology (information science) from Wikipedia

- Paradigm from Wikipedia

- Philosophy from Wikipedia

- Philosophy of mind from Wikipedia

- Psychology from Wikipedia

- Science from Wikipedia

- Scientia potentia est - knowledge is power - Wikipedia

- Skill from Wikipedia

- Thought from Wikipedia

- Understanding from Wikipedia

Types of Knowledge

- A priori and a posteriori from Wikipedia

- Common knowledge from Wikipedia

- Common knowledge (logic) from Wikipedia

- Declarative Knowledge from Decision Automation Resources

- Descriptive knowledge from Wikipedia

- Dispersed knowledge from Wikipedia

- Distributed knowledge from Wikipedia

- Metaknowledge from Wikipedia

- Mutual knowledge (logic) from Wikipedia

- Procedural knowledge from Wikipedia

- Teaching Declarative Knowledge by Renay M. Scott

Musicvideo

- Mahavishnu Orchestra - You Know You Know, August 17, 1972, more recent 3Sat broadcast [17], YouTube Video

References

- ↑ Chess in the Art of Samuel Bak, Center for Holocaust & Genocide Studies, University of Minnesota

- ↑ Jonathan Schaeffer (1997). One Jump Ahead: Challenging Human Supremacy in Checkers. Springer, ISBN 0-387-94930-5, Prelude to Disaster, pp. 255-256, or pp. 249-250 in the Second Edition, 2009

- ↑ Andreas Junghanns, Jonathan Schaeffer (1997). Search versus knowledge in game-playing programs revisited. IJCAI-97, Vol. 1, pdf, 3 Search Versus Knowledge: Practise

- ↑ Hans Berliner, Gordon Goetsch, Murray Campbell, Carl Ebeling (1989). Measuring the Performance Potential of Chess Programs, Advances in Computer Chess 5

- ↑ Peter Kouwenhoven (1987). Advances in Computer Chess, The 5th Triennial Conference. ICCA Journal, Vol. 10, No. 2 (report)

- ↑ Hans Berliner, Gordon Goetsch, Murray Campbell, Carl Ebeling (1990). Measuring the Performance Potential of Chess Programs. Artificial Intelligence, Vol. 43, No. 1

- ↑ Chess in 2010 by Ed Schröder, The Berliner Experiment

- ↑ The end of computer chess progress? by Ed Schröder, rgcc, March 06, 1999

- ↑ Re: Intelligent software, please by Ed Schröder, CCC, November 26, 2001

- ↑ Schröder, Ed (German) from Schachcomputer.info - Wiki, which also refers to the post by Ed Schröder

- ↑ Re: Search or Evaluation? by Mark Uniacke, Hiarcs Forum, October 14, 2007

- ↑ UCI Machine Learning Repository: Chess (King-Rook vs. King-Pawn) Data Set

- ↑ Patanjali from Wikipedia

- ↑ Dap Hartmann (1988). Alen D. Shapiro: Structured Induction in Expert Systems. ICCA Journal, Vol. 11, No. 4

- ↑ Knowledge discovery from Wikipedia

- ↑ Dap Hartmann (2010). How can Humans learn from Computers? Review on Matej Guid's Ph.D. thesis, ICGA Journal, Vol. 33, No. 4

- ↑ Original ZDF broadcast "Sonntagskonzert", September 17, 1972, recorded at Kongresshalle Deutschen Museum, Munich, "Jazz Now", August 17, 1972, curator Joachim-Ernst Berendt, see The Mahavishnu Orchestra On Film, MUENCHEN-72_KPL.pdf, Diese Woche im Fernsehen, Der Spiegel 38/1972 (German), 1972 Summer Olympics