Simulated Annealing


Simulated Annealing (SA) is a Monte Carlo based algorithm for combinatorial optimization problems, inspired by statistical mechanics in thermodynamics. The statistical ensemble, the probability distribution over all possible states of a system, is described by a Markov chain, and during a cooling process the stationary distribution of this chain, reached at equilibrium, converges to an optimal distribution. Thus, the annealing algorithm simulates a nonstationary finite-state Markov chain whose state space is the domain of the cost function, called energy, to be minimized [2].
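The "optimal distribution" can be made concrete with a standard result from statistical mechanics. As a sketch, assume a finite state space S, energy E(x), Boltzmann constant k, temperature T, and let S* be the set of global minima with minimal energy E*. At a fixed temperature the Metropolis chain has the Boltzmann distribution as its stationary distribution, and as T goes to zero this distribution puts all of its mass on the global minima:

$$
\pi_T(x) = \frac{e^{-E(x)/kT}}{\sum_{y \in S} e^{-E(y)/kT}},
\qquad
\lim_{T \to 0} \pi_T(x) =
\begin{cases}
1/|S^*| & \text{if } x \in S^*,\\
0 & \text{otherwise.}
\end{cases}
$$

Dividing numerator and denominator by e^{-E*/kT} shows why: every term with E(y) > E* decays to 0, while each term belonging to a minimum stays 1.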


History

The annealing algorithm is an adaptation of the Metropolis–Hastings algorithm, which generates sample states of a thermodynamic system. The Metropolis algorithm was invented by Marshall Rosenbluth, published by Nicholas Metropolis et al. in 1953 [3] [4], and later generalized by W. Keith Hastings at the University of Toronto [5]. According to Roy Glauber and Emilio Segrè, the original algorithm was invented by Enrico Fermi and reinvented by Stanislaw Ulam [6].

SA was independently described by Scott Kirkpatrick, C. Daniel Gelatt and Mario P. Vecchi in 1983 [7], at that time affiliated with IBM Thomas J. Watson Research Center, Yorktown Heights, and by Vlado Černý from Comenius University, Bratislava in 1985 [8].

Quotes

In the 2003 conference proceedings Celebrating the 50th Anniversary of the Metropolis Algorithm [9] [10], Marshall Rosenbluth describes the algorithm in the following beautifully concise and clear manner [11]:

A simple way to do this [sampling configurations with the Boltzmann weight], as emerged after discussions with Teller, would be to make a trial move: if it decreased the energy of the system, allow it; if it increased the energy, allow it with probability exp(−ΔE/kT) as determined by a comparison with a random number. Each step, after an initial annealing period, is counted as a member of the ensemble, and the appropriate ensemble average of any quantity determined.

Applications

SA has multiple applications: in discrete NP-hard optimization problems such as the Travelling salesman problem, in machine learning and the training of neural networks, and in computer games and computer chess for automated tuning, as elaborated by Peter Mysliwietz in his Ph.D. thesis [12] to optimize the evaluation weight vector of Zugzwang. In Morph, whose variant of temporal difference learning adjusts pattern weights, Robert Levinson et al. used simulated annealing as a metaheuristic to set an individual learning rate for each pattern: the more frequently a pattern is updated, the slower its learning rate becomes [13] [14] [15].

Algorithm

Description

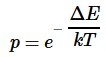

The control flow of the algorithm is determined by two nested loops: the outer loop over decreasing temperature simulates the cooling, and the inner loop performs n Monte Carlo iterations at each temperature. Whenever a randomly picked neighbor state inside the inner loop has a better energy or fitness than the current state, the neighbor becomes the new current state, and also the new optimum if it is fitter than the fittest state so far. Otherwise, if the neighbor's fitness does not exceed the current one, the neighbor may still become the current state, with a probability p that depends on the positive fitness or energy difference ΔE and the absolute temperature T according to the Boltzmann factor:

$$p = e^{-\Delta E / (kT)}$$

where k is the Boltzmann constant and e the base of the exponential function, whose negative exponent keeps p within the probability interval [0, 1]. In optimization, k is usually set to 1, that is, absorbed into the temperature. Accepting worse solutions is a primary feature of SA and important to escape greedy exploitation of a local optimum and to explore other areas: higher temperatures favor exploration, while decreasing temperatures make the algorithm behave more greedily, favoring exploitation of the hopefully global optimum.

Animation

Simulated annealing - searching for a maximum. [16]

At high temperature, the strong noise movement quickly leaves the numerous local maxima, but the global maximum is reliably found because the cooled temperature is no longer sufficient to leave it.

Pseudo Code

The C-like pseudo code is based on Peter Mysliwietz' description in his Ph.D. thesis [17]. Mysliwietz tried several neighbor functions to modify the weight vector; changing one randomly chosen element by a random amount performed well. The fitness function inside the inner loop is, of course, the most time-consuming part. For Zugzwang, Mysliwietz used a database of 500 test positions searched to a depth of one ply, which took about three minutes per iteration on a T800 Transputer; the higher the hit rate of found expert moves, the fitter the weight vector. The whole optimization used a tHigh to tLow ratio of 100, a reduction factor r of 0.95, and n = 40 inner iterations.
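These settings translate into a concrete budget. As a sketch, assume tHigh = 100 and tLow = 1 (only their ratio is given): the outer loop runs while 0.95^j > 0.01, and since 0.95^89 ≈ 0.0104 but 0.95^90 ≈ 0.0099, that gives 90 temperature steps (ln 100 / ln(1/0.95) ≈ 89.8). With n = 40 this makes 3600 inner iterations; at about three minutes each, roughly 180 hours or 7.5 days, a back-of-the-envelope estimate rather than a figure from the thesis. The neighbor function follows the description of changing one random element by a random amount; the uniform step distribution, NWEIGHTS and the function names are hypothetical.

```c
#include <assert.h>
#include <stdlib.h>

#define NWEIGHTS 8 /* hypothetical length of the weight vector */

typedef struct { double w[NWEIGHTS]; } vector;

/* Neighbor of the kind described above: one randomly chosen element is
   changed by a random amount, here uniform in [-maxStep, maxStep)
   (the step distribution is an assumption). */
vector randomNeighbor(vector v, double maxStep) {
  assert(maxStep > 0.0);
  int i = rand() % NWEIGHTS;
  double u = rand() / (RAND_MAX + 1.0); /* uniform in [0, 1) */
  v.w[i] += (2.0 * u - 1.0) * maxStep;
  return v;
}

/* Number of passes of the outer loop for (t = tHigh; t > tLow; t *= r),
   i.e. how often the inner loop of n iterations runs. */
int temperatureSteps(double tHigh, double tLow, double r) {
  assert(tHigh > 0.0 && tLow > 0.0 && r > 0.0 && r < 1.0);
  int steps = 0;
  for (double t = tHigh; t > tLow; t *= r) ++steps;
  return steps;
}
```

Because only the ratio of tHigh to tLow matters for the step count, temperatureSteps(1.0, 0.01, 0.95) gives the same 90 steps.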

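```c
#include <math.h>
#include <stdbool.h>
#include <stdlib.h>

#define NWEIGHTS 64 /* hypothetical size of the evaluation weight vector */

typedef struct { double w[NWEIGHTS]; } vector; /* copied by value */

/* problem specific, not shown: a random start vector, a random neighbor,
   and the (expensive) fitness, e.g. the hit rate on a set of test positions */
vector randomWeights(void);
vector neighbor(vector v);
double fitness(vector v);

bool accept(double d, double t);

/**
 * simulatedAnnealing
 * @author Peter Mysliwietz, slightly modified
 * @param tHigh is the start temperature
 * @param tLow is the minimal end temperature
 * @param r is the temperature reduction factor < 1.0
 * @param n number of iterations for each temperature
 * @return best weight vector
 */
vector simulatedAnnealing(double tHigh, double tLow, double r, int n) {
  vector currentWeights = randomWeights();
  double currentFitness = fitness(currentWeights);
  vector bestWeights = currentWeights;
  double fittest = currentFitness;
  for (double t = tHigh; t > tLow; t *= r) {
    for (int i = 0; i < n; ++i) {
      vector neighborWeights = neighbor(currentWeights);
      double neighborFitness = fitness(neighborWeights); /* evaluated once per iteration */
      if (neighborFitness > currentFitness) {
        currentWeights = neighborWeights;
        currentFitness = neighborFitness;
        if (neighborFitness > fittest) {
          fittest = neighborFitness;
          bestWeights = neighborWeights;
        }
      } else if (accept(currentFitness - neighborFitness, t)) {
        currentWeights = neighborWeights; /* accept a worse (or equal) neighbor */
        currentFitness = neighborFitness;
      }
    } /* for i */
  } /* for t */
  return bestWeights;
}

/**
 * accept
 * @param d is the energy difference >= 0
 * @param t is the current temperature
 * @return true with probability of Boltzmann factor e^(-d/kt)
 */
bool accept(double d, double t) {
  /* The physical Boltzmann constant 1.38064852e-23 J/K would make p
     practically 0 for unitless fitness differences, turning SA into plain
     hill climbing; as usual in optimization, k is absorbed into t. */
  const double k = 1.0;
  double p = exp(-d / (k * t));
  double r = rand() / (RAND_MAX + 1.0); /* uniform in [0, 1) */
  return r < p;
}
```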

See also

- Automated Tuning

- Genetic Programming

- Iteration

- Learning

- Monte-Carlo Tree Search

- SPSA

- Trial and Error

Selected Publications

1948 ...

- Enrico Fermi, Robert D. Richtmyer (1948). Note on census-taking in Monte Carlo calculations. Los Alamos National Laboratory, pdf

- Nicholas Metropolis, Stanislaw Ulam (1949). The Monte Carlo Method. Journal of the American Statistical Association, Vol. 44, No. 247, pdf

1950 ...

- Nicholas Metropolis, Arianna W. Rosenbluth, Marshall N. Rosenbluth, Augusta H. Teller, Edward Teller (1953). Equation of State Calculations by Fast Computing Machines. Journal of Chemical Physics, Vol. 21, No. 6

- Marshall N. Rosenbluth, Arianna W. Rosenbluth (1954). Further Results on Monte Carlo Equations of State. Journal of Chemical Physics, Vol. 22, No. 5, pdf

1970 ...

- W. Keith Hastings (1970). Monte Carlo Sampling Methods Using Markov Chains and Their Applications. University of Toronto, Biometrika, Vol. 57, No. 1, pdf

1980 ...

- Scott Kirkpatrick, C. Daniel Gelatt, Mario P. Vecchi (1983). Optimization by Simulated Annealing. Science, Vol. 220, No. 4598, pdf

- Vlado Černý (1985). Thermodynamical approach to the traveling salesman problem: An efficient simulation algorithm. Journal of Optimization Theory and Applications, Vol. 45, No. 1

- Saul B. Gelfand, Sanjoy K. Mitter (1985). Analysis of simulated annealing for optimization. 24th IEEE Conference on Decision and Control

- Saul B. Gelfand (1987). Analysis of Simulated Annealing Type Algorithms. Ph.D. thesis, MIT, advisor: Sanjoy K. Mitter, pdf

- Nicholas Metropolis (1987). The Beginning of the Monte Carlo Method. Los Alamos Science Special, pdf

- Rob A. Rutenbar (1989). Simulated Annealing Algorithms. IEEE Circuits and Devices Magazine, pdf

1990 ...

- Gunter Dueck, Tobias Scheuer (1990). Threshold Accepting: A General Purpose Optimization Algorithm Appearing Superior to Simulated Annealing. Journal of Computational Physics, Vol. 90, No. 1 [21]

- Ingo Althöfer, Klaus-Uwe Koschnick (1991). On the convergence of “Threshold Accepting”. Applied Mathematics and Optimization, Vol. 24, No. 1

- Andrey Grigoriev (1991). Artificial Intelligence or Stochastic Relaxation: Simulated Annealing Challenge. Heuristic Programming in AI 2

- Bernd Brügmann (1993). Monte Carlo Go. pdf

- René V. V. Vidal (ed.) (1993). Applied Simulated Annealing. Lecture Notes in Economics and Mathematical Systems, Vol. 396, Springer

- Robert Levinson (1994). Experience-Based Creativity. Artificial Intelligence and Creativity: An Interdisciplinary Approach, Kluwer

- Peter Mysliwietz (1994). Konstruktion und Optimierung von Bewertungsfunktionen beim Schach. Ph.D. thesis (German)

- Olivier C. Martin, Steve Otto (1996). Combining simulated annealing with local search heuristics. Annals of Operations Research, Vol. 63, No. 1, Springer

- James C. Spall (1999). Stochastic Optimization: Stochastic Approximation and Simulated Annealing. in John G. Webster (ed.) (1999). Encyclopedia of Electrical and Electronics Engineering, Vol. 20, John Wiley & Sons, pdf

2000 ...

- Ari Shapiro, Gil Fuchs, Robert Levinson (2002). Learning a Game Strategy Using Pattern-Weights and Self-play. CG 2002, pdf

- Eric Triki, Yann Collette, Patrick Siarry (2002). Empirical study of Simulated Annealing aimed at improved multiobjective optimization. Research Paper

- James Gubernatis (ed.) (2003). The Monte Carlo Method in Physical Sciences: Celebrating the 50th Anniversary of the Metropolis Algorithm. AIP Conference Proceedings [22]

- Daniel Walker, Robert Levinson (2004). The MORPH Project in 2004. ICGA Journal, Vol. 27, No. 4

- James Gubernatis (2005). Marshall Rosenbluth and the Metropolis Algorithm. Physics of Plasmas, Vol. 12, No. 5, pdf

- Eric Triki, Yann Collette, Patrick Siarry (2005). A Theoretical Study on the Behavior of Simulated Annealing leading to a new Cooling Schedule. European Journal of Operational Research, Vol. 166, No. 1

2010 ...

- Todd W. Neller, Christopher J. La Pilla (2010). Decision-Theoretic Simulated Annealing. FLAIRS Conference 2010

- Zbigniew J. Czech, Wojciech Mikanik, Rafał Skinderowicz (2010). Implementing a Parallel Simulated Annealing Algorithm. Lecture Notes in Computer Science, Volume 6067, Springer

- Rafał Skinderowicz (2011). Co-operative, Parallel Simulated Annealing for the VRPTW. Computational Collective Intelligence. Technologies and Applications, Lecture Notes in Computer Science, Springer [23]

- Peter Rossmanith (2011). Simulated Annealing. in Berthold Vöcking et al. (eds.) (2011). Algorithms Unplugged. Springer

- Alan J. Lockett, Risto Miikkulainen (2011). Real-Space Evolutionary Annealing. GECCO 2011

- Alan J. Lockett (2012). General-Purpose Optimization Through Information Maximization. Ph.D. thesis, University of Texas at Austin, advisor Risto Miikkulainen, pdf

- Ben Ruijl, Jos Vermaseren, Aske Plaat, Jaap van den Herik (2013). Combining Simulated Annealing and Monte Carlo Tree Search for Expression Simplification. CoRR abs/1312.0841

- Alan J. Lockett, Risto Miikkulainen (2014). Evolutionary Annealing: Global Optimization in Arbitrary Measure Spaces. Journal of Global Optimization, Vol. 58

Forum Posts

- Re: Parameter tuning by Rémi Coulom, CCC, March 23, 2011 » Automated Tuning

- Idea for Automatic Calibration of Evaluation Function... by Steve Maughan, CCC, January 22, 2010

External Links

Simulated Annealing

- Simulated annealing from Wikipedia

- Adaptive simulated annealing from Wikipedia

- Simulated Annealing from Wolfram MathWorld

- Algorithmus der Woche - Informatikjahr 2006 by Peter Rossmanith, RWTH Aachen (German)

- Simulated Annealing Tutorial by John D. Hedengren, Brigham Young University

- The Simulated Annealing Algorithm by Katrina Ellison Geltman, February 20, 2014

Related Topics

- Combinatorial optimization from Wikipedia

- Cross-entropy method from Wikipedia

- Expectation–maximization algorithm from Wikipedia

- Gibbs sampling from Wikipedia

- Global optimization from Wikipedia

- Greedy algorithm from Wikipedia

- Hill climbing from Wikipedia

- Local search (optimization) from Wikipedia

- Markov chain Monte Carlo from Wikipedia

- Monte Carlo method from Wikipedia

- Quantum annealing from Wikipedia

- Simultaneous perturbation stochastic approximation from Wikipedia

- Stochastic gradient descent from Wikipedia

- Stochastic optimization from Wikipedia

- Tabu search from Wikipedia

Misc

- Annealing (metallurgy) from Wikipedia

- Esperanza Spalding - Good Lava, Emily's D+Evolution, YouTube Video

References

- [1] Train wheel production, Bochumer Verein, Bochum, Germany, ExtraSchicht 2010, The Industrial Heritage Trail, image by Rainer Halama, June 19, 2010, CC BY-SA 3.0, Wikimedia Commons, Glühen from Wikipedia.de (German)

- [2] Saul B. Gelfand, Sanjoy K. Mitter (1985). Analysis of simulated annealing for optimization. 24th IEEE Conference on Decision and Control

- [3] Nicholas Metropolis, Arianna W. Rosenbluth, Marshall N. Rosenbluth, Augusta H. Teller, Edward Teller (1953). Equation of State Calculations by Fast Computing Machines. Journal of Chemical Physics, Vol. 21, No. 6

- [4] Nicholas Metropolis (1987). The Beginning of the Monte Carlo Method. Los Alamos Science Special, pdf

- [5] W. Keith Hastings (1970). Monte Carlo Sampling Methods Using Markov Chains and Their Applications. University of Toronto, Biometrika, Vol. 57, No. 1, pdf

- [6] Metropolis–Hastings algorithm from Wikipedia

- [7] Scott Kirkpatrick, C. Daniel Gelatt, Mario P. Vecchi (1983). Optimization by Simulated Annealing. Science, Vol. 220, No. 4598, pdf

- [8] Vlado Černý (1985). Thermodynamical approach to the traveling salesman problem: An efficient simulation algorithm. Journal of Optimization Theory and Applications, Vol. 45, No. 1

- [9] The Monte Carlo Method in Physical Sciences: Celebrating the 50th Anniversary of the Metropolis Algorithm

- [10] James Gubernatis (ed.) (2003). The Monte Carlo Method in Physical Sciences: Celebrating the 50th Anniversary of the Metropolis Algorithm. AIP Conference Proceedings

- [11] James Gubernatis (2005). Marshall Rosenbluth and the Metropolis Algorithm. Physics of Plasmas, Vol. 12, No. 5, pdf

- [12] Peter Mysliwietz (1994). Konstruktion und Optimierung von Bewertungsfunktionen beim Schach. Ph.D. thesis (German)

- [13] Robert Levinson (1994). Experience-Based Creativity. Artificial Intelligence and Creativity: An Interdisciplinary Approach, Kluwer

- [14] Ari Shapiro, Gil Fuchs, Robert Levinson (2002). Learning a Game Strategy Using Pattern-Weights and Self-play. CG 2002, pdf

- [15] Johannes Fürnkranz (2000). Machine Learning in Games: A Survey. Austrian Research Institute for Artificial Intelligence, OEFAI-TR-2000-3, pdf

- [16] Start temperature: 25, step: 0.1, end temperature: 0, 1,000,000 iterations at each temperature: Animated GIF Hill Climbing with Simulated Annealing by Kingpin13, Wikimedia Commons, Simulated annealing from Wikipedia

- [17] Peter Mysliwietz (1994). Konstruktion und Optimierung von Bewertungsfunktionen beim Schach. Ph.D. thesis, 7.4. Simulated Annealing, 7.4.2. Beschreibung des Algorithmus, Fig. 29, p. 146 (German)

- [18] Exponential function from Wikipedia

- [19] C mathematical functions - Random number generation from Wikipedia

- [20] Monte Carlo History by Dario Bressanini

- [21] Schwellenakzeptanz from Wikipedia.de (German)

- [22] The Monte Carlo Method in Physical Sciences: Celebrating the 50th Anniversary of the Metropolis Algorithm

- [23] Vehicle routing problem from Wikipedia