Kathe Spracklen

Kathleen (Kathe) Spracklen is an American mathematician, computer scientist and microcomputer chess pioneer. Along with her husband Dan, Kathe started chess programming in 1977 on a Z-80 based Wavemate Jupiter III [2] in assembly language. Their first program, Sargon [3], had a one- or two-ply search without quiescence search but with exchange evaluation [4]. After their success at the Second West Coast Computer Faire MCCT in March 1978 and a shared third place at ACM 1978, the Spracklens became professional computer chess programmers. Sargon II was ported to various early home computers, for instance the TRS-80 and the 6502-based Apple II [5], as well as to dedicated units such as the Chafitz ARB Sargon 2.5 [6].

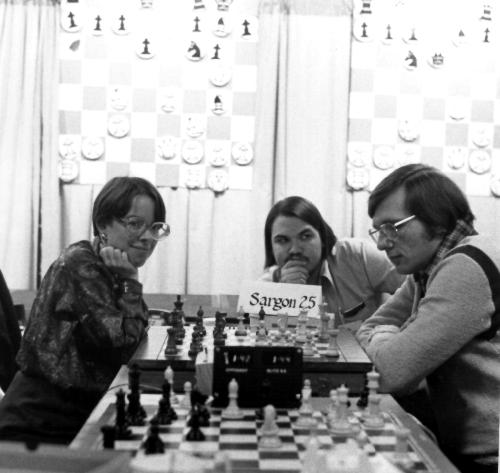

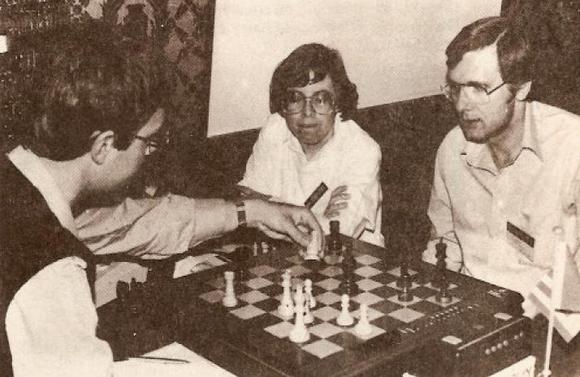

Photos

Kathe Spracklen and Dan Spracklen (far right) at ACM 1979 [7]

Kathe Spracklen and Dan Spracklen, WCCC 1983 [8]

Fidelity

After civil proceedings between manufacturer Applied Concepts and their sales company Chafitz, who first marketed the Sargon 2.5 program, the Spracklens began their long-term collaboration with Sidney Samole and Fidelity Electronics in the 1980s. Among other successes, their computers won the first four World Microcomputer Chess Championships: Chess Challenger at the WMCCC 1980, Fidelity X at the WMCCC 1981, Elite Auto Sensory at the WMCCC 1983, and Fidelity Elite X at the WMCCC 1984 (shared).

Saitek

In 1989 or 1990, soon after Samole sold Fidelity to Hegener & Glaser, Kathe and Dan Spracklen started to work for Eric Winkler and Saitek, and developed a program for Sparc processors. When Kasparov Sparc lost to Ed Schröder's ChessMachine [9] in Madrid 1992 and they did not win that world title, their involvement in computer chess almost ended [10].

Donald Michie

Kathleen Spracklen on Donald Michie, excerpt from Oral History [11]:

One of the most thrilling times of my entire life was the month that Dr. Donald Michie spent with us in San Diego. And he, of course, is - was head of the computer science department at Oxford, I believe, for decades. He was on the original team with Turing that broke the Enigma code. And already he was quite an elderly gentleman when he came to work with us but that wasn't stopping him from having a very full schedule as the head of the I think it was the Turing Institute in Glasgow that headed up. Quite, totally an amazing human being.

Delightful and totally amazing. And he had this concept that he wanted to try out that he thought might possibly solve computer chess. And we spent a month exploring it. It was the idea of reaching a steady state. The idea was that you would establish a number of parameters of positional analysis and your program would score, independently score vast arrays of positions using this set of known parameters. And then the program would basically perform a cluster analysis and so you'd do it on a number of positions and on game after game after game of Grand Master Chess. You submitted we just we used hundreds of thousands of positions.

And then what it did was it took the evaluation that known chess theories said this position is worth this much. So we had an external evaluation because it came out of known Master Chess games. And then we had all of these parameters that our program was capable of evaluating and then you used this data to tune your weighing of the parameters. And you could also tune the weighting for different stages of the game. So at the opening, you could use a certain weight, mid-game, you could use a certain weight, in the midst of your king being attacked, could use a set of weights, when you're pressing an attack, you could use a set of weights, when they're past pawns on the board, you know, there were several different stages of the game that could have different weightings. And we used a program called Knowledge Seeker that helped you to determine these relative weightings. And so after a month of training the program, what you basically did was you take your total set of positions and you would use something like 80% of them as a training set and then the last 20 as the test set. And you'd find out, well, how did the program do in evaluating these positions it had never seen based on these that it had seen. And it did just a breathtaking job of determining the correct worth of the positions. And so we were so excited. We were going to turn it loose on its first play a game of chess. We were going to use this as the positional evaluator.

Yeah. It was, like, oh, it was breathtaking. And we watched the program play chess. It was- you could gasp for breath. No computer program ever played a game of chess like that. It looked like an incredibly promising seven-year-old. We lost the game in just a few moves but it lost it brilliantly. <laughter> It got its queen out there, it maneuvered its knight, it launched a king side attack, it sacrificed its queen. <laughter> Well, of course it sacrificed its queen. Do you realize, in every single Grand Master game of chess, when you sacrifice your queen, it's phenomenally brilliant. You are winning the game. So if you can find a way to get your queen out there and sacrifice her, well, you've won.
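The weight-tuning procedure described in the excerpt (score positions with a set of parameters, fit the weights against externally known evaluations, then check the result on held-out positions) can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the synthetic data, the three made-up feature weights, and the use of plain stochastic gradient descent on a linear evaluator. It does not reproduce Knowledge Seeker or the Spracklens' actual method, about which the excerpt gives no details; only the 80/20 split is taken from the text.

```python
import random


def evaluate(features, weights):
    """Linear positional score: weighted sum of the feature values."""
    return sum(w * f for w, f in zip(weights, features))


def tune_weights(samples, n_features, epochs=50, lr=0.05):
    """Fit weights by stochastic gradient descent on squared error.

    This is a generic stand-in for the tuning step, not the method
    used by the Spracklens or by Knowledge Seeker.
    """
    weights = [0.0] * n_features
    for _ in range(epochs):
        for features, target in samples:
            error = evaluate(features, weights) - target
            for i, f in enumerate(features):
                weights[i] -= lr * error * f
    return weights


def mean_abs_error(samples, weights):
    """Average absolute difference between predicted and known scores."""
    return sum(abs(evaluate(f, weights) - t) for f, t in samples) / len(samples)


def make_synthetic_positions(n, true_weights, rng):
    """Hypothetical stand-in for positions scored from master games."""
    samples = []
    for _ in range(n):
        features = [rng.uniform(-1.0, 1.0) for _ in true_weights]
        target = evaluate(features, true_weights) + rng.gauss(0.0, 0.01)
        samples.append((features, target))
    return samples


def run_experiment(seed=0):
    rng = random.Random(seed)
    true_weights = [1.0, 0.5, -0.3]  # made-up values for the demo
    samples = make_synthetic_positions(500, true_weights, rng)
    split = int(0.8 * len(samples))  # 80% training set, 20% test set
    train, test = samples[:split], samples[split:]
    weights = tune_weights(train, len(true_weights))
    return weights, mean_abs_error(test, weights)


if __name__ == "__main__":
    weights, test_error = run_experiment()
    print("tuned weights:", [round(w, 3) for w in weights])
    print("mean absolute error on unseen positions:", round(test_error, 4))
```

To mirror the stage-dependent weighting mentioned in the excerpt, one could run `tune_weights` separately on the positions belonging to each stage (opening, middlegame, king under attack, and so on) and keep one weight list per stage. As the anecdote shows, a low error on the test set says nothing about whether the resulting evaluator plays sound chess.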

Selected Publications

1978 ...

- Dan Spracklen, Kathe Spracklen (1978). SARGON: A Computer Chess Program. Hayden Books, amazon.com

- Dan Spracklen, Kathe Spracklen (1978). First Steps in Computer Chess Programming. BYTE, Vol. 3, No. 10, pdf from The Computer History Museum

- Dan Spracklen, Kathe Spracklen (1978). An Exchange Evaluator for Computer Chess. BYTE, Vol. 3, No. 11

- Kathe Spracklen (1978). The Making of Sargon. Personal Computing, Vol. 2, No. 12, pp. 62

- Kathe Spracklen (1979). The Playing of Sargon. Personal Computing, Vol. 3, No. 1, pp. 70 » MCCT 1978

- Kathe Spracklen (1979). Micro Graphics and X-Y Plotter. Personal Computing, Vol. 3, No. 2, pp. 75

- Kathe Spracklen (1979). Z-80 and 8080 assembly language programming. Hayden Books, amazon.com, Internet Archive

- Kathe Spracklen (1979). Second Annual European Microcomputer Chess Championship - Results and Authors. ICCA Newsletter, Vol. 2, No. 2 » PCW-MCC 1979

1980 ...

- Kathe Spracklen (1981). The Past, Present, and Future. Personal Computing, Vol. 5, No. 4, pp. 67

- Ben Mittman, Tony Marsland, Monroe Newborn, Kathe Spracklen, Ken Thompson (1981). Computer chess: Master level play in 1981? ACM 81: Proceedings of the ACM '81 conference

- Kathe Spracklen (1983). Tutorial: Representation of an Opening Tree. ICCA Newsletter, Vol. 6, No. 1

- Kathe Spracklen (1983). The Past and Future of Microcomputer Chess. Computer Chess Digest Annual 1983

- Göran Grottling (1989). Eine Lebende Legende - Ein Interview mit Kathe Spracklen. Modul 1/89, pp. 41-45 (German), pdf hosted by Hein Veldhuis

2005

- Gardner Hendrie (2005). Oral History of Kathe and Dan Spracklen. pdf from The Computer History Museum

External Links

- Kathe Spracklen's ICGA Tournaments

- Dan and Kathe Spracklen from ChessComputers.org

- Search the Mastering the Game exhibition and on-line collection from The Computer History Museum

- Oral History of Kathleen and Danny Spracklen, March 2, 2005 by Gardner Hendrie, The Computer History Museum

- Historic Pictures by Ed Schröder

- Computer Chess by Dan and Kathe Spracklen from AtariArchives.org - archiving vintage computer books, information, and software

- Computer Chronicles: Computer Games (1985) from the Internet Archive, "Lady Sargon" at 4:10, also as YouTube Video

References

- [1] László Lindner, A SZÁMÍTÓGÉPES SAKK KÉPEKBEN című melléklete - The pictures of the Beginning of Chess Computers

- [2] Jupiter II-1975

- [3] Sargon from Wikipedia

- [4] Sargon Z80 assembly listing by Dan and Kathe Spracklen, hosted by Andre Adrian

- [5] Sargon II from C64-Wiki (German)

- [6] Chafitz ARB Sargon 2.5

- [7] Sargon 2.5 at 10th ACM North American Computer Chess Championship in Detroit, Michigan, by Monroe Newborn, from The Computer History Museum

- [8] László Lindner, A SZÁMÍTÓGÉPES SAKK KÉPEKBEN című melléklete - The pictures of the Beginning of Chess Computers

- [9] Madrid 1992, Chess, Round 5, Game 7 from the ICGA Tournament Database

- [10] Gardner Hendrie (2005). Oral History of Kathe and Dan Spracklen. pdf from The Computer History Museum

- [11] Gardner Hendrie (2005). Oral History of Kathe and Dan Spracklen. pdf from The Computer History Museum