Difference between revisions of "Stockfish NNUE"

(→Strong Points) |

GerdIsenberg (talk | contribs) |

||

| Line 1: | Line 1: | ||

'''[[Main Page|Home]] * [[Engines]] * [[Stockfish]] * NNUE''' | '''[[Main Page|Home]] * [[Engines]] * [[Stockfish]] * NNUE''' | ||

| − | [[FILE:Sfnnue.png|border|right|thumb|250px]] | + | [[FILE:Sfnnue.png|border|right|thumb|250px| Stockfish NNUE Logo <ref>Stockfish NNUE Logo from [https://github.com/nodchip/Stockfish GitHub - nodchip/Stockfish: UCI chess engine] by [[Hisayori Noda|Nodchip]]</ref> ]] |

'''Stockfish NNUE''',<br/> | '''Stockfish NNUE''',<br/> | ||

| Line 16: | Line 16: | ||

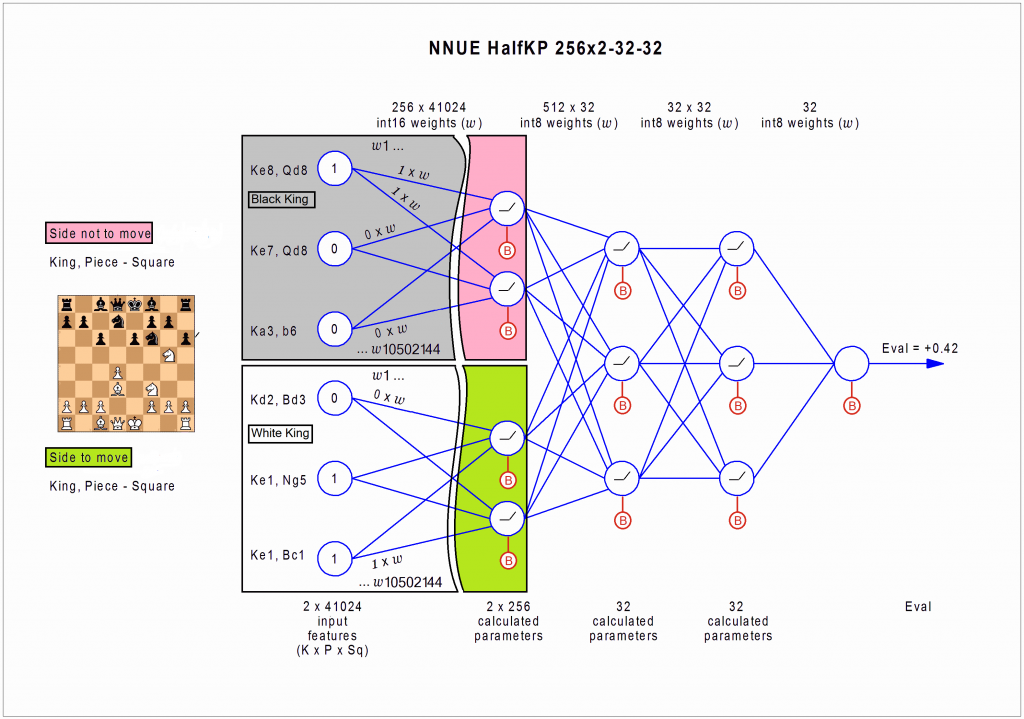

The [[Neural Networks|neural network]] consists of four layers. The input layer is heavily overparametrized, feeding in the [[Board Representation|board representation]] for all king placements per side. | The [[Neural Networks|neural network]] consists of four layers. The input layer is heavily overparametrized, feeding in the [[Board Representation|board representation]] for all king placements per side. | ||

The efficiency of [[NNUE]] is due to [[Incremental Updates|incremental update]] of the input layer outputs in [[Make Move|make]] and [[Unmake Move|unmake move]], | The efficiency of [[NNUE]] is due to [[Incremental Updates|incremental update]] of the input layer outputs in [[Make Move|make]] and [[Unmake Move|unmake move]], | ||

| − | where only a tiny fraction of its neurons need to be considered <ref>[http://www.talkchess.com/forum3/viewtopic.php?f=7&t=74531&start=1 Re: NNUE accessible explanation] by [[Jonathan Rosenthal]], [[CCC]], July 23, 2020</ref>. The remaining three layers with 256x2x32-32x32-32x1 neurons are computational less expensive, best calculated using appropriate [[SIMD and SWAR Techniques|SIMD instructions]] performing fast [[Word|16-bit integer]] arithmetic, like [[AVX2]] on [[x86-64]], or if available, [[AVX-512]]. | + | where only a tiny fraction of its neurons need to be considered <ref>[http://www.talkchess.com/forum3/viewtopic.php?f=7&t=74531&start=1 Re: NNUE accessible explanation] by [[Jonathan Rosenthal]], [[CCC]], July 23, 2020</ref>. The remaining three layers with 256x2x32-32x32-32x1 neurons are computational less expensive, best calculated using appropriate [[SIMD and SWAR Techniques|SIMD instructions]] performing fast [[Word|16-bit integer]] arithmetic, like [[SSE2]] or [[AVX2]] on [[x86-64]], or if available, [[AVX-512]]. |

[[FILE:StockfishNNUELayers.png|none|border|text-bottom|1024px]] | [[FILE:StockfishNNUELayers.png|none|border|text-bottom|1024px]] | ||

| Line 91: | Line 91: | ||

[[Category:Fish]] | [[Category:Fish]] | ||

[[Category:Demonology]] | [[Category:Demonology]] | ||

| − | |||

[[Category:David Fiuczynski]] | [[Category:David Fiuczynski]] | ||

[[Category:Hiromi Uehara]] | [[Category:Hiromi Uehara]] | ||

Revision as of 14:07, 2 August 2020


Stockfish NNUE is a Stockfish branch by Hisayori Noda, aka Nodchip, that uses Efficiently Updatable Neural Networks (stylized as ƎUИИ, or reversed as NNUE) to replace its standard evaluation.

NNUE networks, introduced in 2018 by Yu Nasu [2], had previously been applied successfully in Shogi evaluation functions embedded in a Stockfish-based search [3], such as YaneuraOu [4] and Kristallweizen-kai [5].

In 2019, Nodchip incorporated NNUE into Stockfish 10, both as a proof of concept and with the intention of giving something back to the Stockfish community [6].

After support and announcements by Henk Drost in May 2020 [7] and subsequent enhancements, Stockfish NNUE was established and recognized. In summer 2020, with more people involved in testing and training, the computer chess community reacted enthusiastically to its rapidly rising playing strength, achieved with different networks trained using a mixture of supervised and reinforcement learning methods. Despite the approximately halved search speed, Stockfish NNUE seemingly became stronger than its original [8]. In July 2020, the playing code of NNUE was put into the official Stockfish repository as a branch for further development and examination. However, the training code still remains in Nodchip's repository [9] [10].


NNUE Structure

The neural network consists of four layers. The input layer is heavily overparametrized, feeding in the board representation for all king placements per side. The efficiency of NNUE comes from incrementally updating the input layer's outputs in make and unmake move, where only a tiny fraction of its neurons needs to be considered [11]. The remaining three layers, with 256x2x32-32x32-32x1 neurons, are computationally less expensive and are best calculated using appropriate SIMD instructions that perform fast 16-bit integer arithmetic, such as SSE2 or AVX2 on x86-64 or, if available, AVX-512.
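The incremental update of the input layer outputs can be illustrated with a minimal Python sketch. It is not Stockfish code: the feature count, the hidden width of 8 (the article gives 256 per side), the random weights, and the names `full_refresh` and `apply_update` are all assumptions made for illustration. The idea shown is that when a move switches a few input features off and on, only the weight rows of those features are subtracted from or added to the stored sums, instead of recomputing the whole layer.

```python
import random

# Hypothetical toy sizes; a real NNUE input layer is far larger.
NUM_FEATURES = 64
HIDDEN = 8

rng = random.Random(0)
# One integer weight row per input feature, plus a bias vector (random, for illustration).
WEIGHTS = [[rng.randint(-50, 50) for _ in range(HIDDEN)] for _ in range(NUM_FEATURES)]
BIAS = [rng.randint(-50, 50) for _ in range(HIDDEN)]


def full_refresh(active_features):
    """Recompute the input layer outputs from scratch for a set of active features."""
    acc = list(BIAS)
    for f in active_features:
        for i in range(HIDDEN):
            acc[i] += WEIGHTS[f][i]
    return acc


def apply_update(acc, removed, added):
    """Incrementally update the stored outputs: touch only the features that changed."""
    new_acc = list(acc)
    for f in removed:
        for i in range(HIDDEN):
            new_acc[i] -= WEIGHTS[f][i]
    for f in added:
        for i in range(HIDDEN):
            new_acc[i] += WEIGHTS[f][i]
    return new_acc


# "Make move": feature 17 is switched off and feature 25 is switched on.
before = full_refresh({3, 17, 42})
after = apply_update(before, removed=[17], added=[25])

# "Unmake move": the reverse update restores the previous outputs exactly.
restored = apply_update(after, removed=[25], added=[17])
```

Because the arithmetic is done on integers, the incremental result is exactly equal to a full recomputation, which is why make and unmake can be applied repeatedly during search without accumulating error.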

NNUE layers in action [12]
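The three remaining layers (256x2x32-32x32-32x1) can be sketched as a plain integer forward pass. Only the layer sizes come from the text above; the clipped activation range of 0 to 127, the right shift used for rescaling, the random weights, and all function names are assumptions chosen for illustration, not the actual Stockfish NNUE implementation. A real engine would compute the same sums with SIMD instructions rather than Python loops.

```python
import random

# Layer sizes from the article: 256x2 inputs -> 32 -> 32 -> 1 output.
LAYER_SIZES = [(256 * 2, 32), (32, 32), (32, 1)]


def clipped_relu(values, lo=0, hi=127):
    """Clamp each value into [lo, hi]; the range is an assumption for this sketch."""
    return [min(max(v, lo), hi) for v in values]


def affine(inputs, weights, bias):
    """Integer matrix-vector product plus bias, one output per weight row."""
    return [b + sum(w * x for w, x in zip(row, inputs)) for row, b in zip(weights, bias)]


def make_layers(rng):
    """Build random small-integer weights and biases for each layer (illustrative only)."""
    layers = []
    for n_in, n_out in LAYER_SIZES:
        weights = [[rng.randint(-8, 8) for _ in range(n_in)] for _ in range(n_out)]
        bias = [rng.randint(-8, 8) for _ in range(n_out)]
        layers.append((weights, bias))
    return layers


def evaluate(input_layer_outputs, layers, shift=6):
    """Run the three small layers on the 512 outputs of the input layer."""
    x = clipped_relu(input_layer_outputs)
    for weights, bias in layers[:-1]:
        # Rescale with an arithmetic shift (assumed), then clamp again.
        x = clipped_relu([v >> shift for v in affine(x, weights, bias)])
    weights, bias = layers[-1]
    return affine(x, weights, bias)[0]


def weight_count():
    """Number of weights in the three layers, excluding biases."""
    return sum(n_in * n_out for n_in, n_out in LAYER_SIZES)


rng = random.Random(1)
layers = make_layers(rng)
inputs = [rng.randint(-200, 200) for _ in range(256 * 2)]
score = evaluate(inputs, layers)
```

With these sizes the three layers hold 512x32 + 32x32 + 32x1 = 17,440 weights, which is small compared with the heavily overparametrized input layer.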

Strong Points

- Reuses and benefits from Stockfish's highly optimized search function, as well as almost all of Stockfish's code

- Runs on the CPU only. It does not require expensive video cards or the installation of video drivers and specific libraries, so it is much easier for users to install than other NN engines such as Leela Chess Zero, and it can run on almost all modern computers

- Requires much smaller training sets. Some high-scoring networks can be built through the effort of one or a few people within a few days. It does not require massive computing from a supercomputer and/or from the community

See also

Forum Posts

2020 ...

January ...

- The Stockfish of shogi by Larry Kaufman, CCC, January 07, 2020 » Shogi

- Stockfish NNUE by Henk Drost, CCC, May 31, 2020 » Stockfish

- Stockfish NN release (NNUE) by Henk Drost, CCC, May 31, 2020

- nnue-gui 1.0 released by Norman Schmidt, CCC, June 17, 2020

- stockfish-NNUE as grist for SF development? by Warren D. Smith, FishCooking, June 21, 2020

July

- Can the sardine! NNUE clobbers SF by Henk Drost, CCC, July 16, 2020

- End of an era? by Michel Van den Bergh, FishCooking, July 20, 2020

- Sergio Vieri second net is out by Sylwy, CCC, July 21, 2020

- NNUE accessible explanation by Martin Fierz, CCC, July 21, 2020

- Re: NNUE accessible explanation by Jonathan Rosenthal, CCC, July 23, 2020

- Stockfish NNUE by Lion, CCC, July 25, 2020

- SF-NNUE going forward... by Zenmastur, CCC, July 27, 2020

- 7000 games testrun of SFnnue sv200724_0123 finished by Stefan Pohl, FishCooking, July 26, 2020

- New sf+nnue play-only compiles by Norman Schmidt, CCC, July 27, 2020

- Stockfish+NNUEsv +80 Elo vs Stockfish 17jul !! by Kris, Rybka Forum, July 28, 2020

- LC0 vs. NNUE - some tech details... by Srdja Matovic, CCC, July 29, 2020 » Lc0

- Stockfish NNUE and testsuites by Jouni Uski, CCC, July 29, 2020

- Stockfish NNue [download] by Ed Schröder, CCC, July 30, 2020

External Links

Chess Engine

- Stockfish NNUE – The Complete Guide, June 19, 2020 (Japanese and English)

- GitHub - nodchip/Stockfish: UCI chess engine by Nodchip

- GitHub - joergoster/Stockfish-NNUE: UCI Chess engine Stockfish with an Efficiently Updatable Neural-Network-based evaluation function hosted by Jörg Oster

- GitHub - FireFather/sf-nnue: Stockfish NNUE (efficiently updateable neural network) by Norman Schmidt

- GitHub - FireFather/nnue-gui: basic windows application for using nodchip's stockfish-nnue software by Norman Schmidt

- Stockfish NNUE Wiki

- NNUE merge · Issue #2823 · official-stockfish/Stockfish · GitHub by Joost VandeVondele, July 25, 2020 [13]

- GitHub - official-stockfish/networks: Evaluation networks for Stockfish

- Index of /~sergio-v/nnue by Sergio Vieri

- Stockfish NNUE | Home of the Dutch Rebel hosted by Ed Schröder

- Stockfish NNUE Development Versions

- Stockfish+NNUE 150720 64-bit 4CPU in CCRL Blitz

Misc

- Stockfish from Wikipedia

- Nue from Wikipedia

- Ikuchi from Wikipedia

- Hiromi’s Sonicbloom - Ue Wo Muite Aruko, Tokyo Jazz, 2008, YouTube Video

References

- [1] Stockfish NNUE Logo from GitHub - nodchip/Stockfish: UCI chess engine by Nodchip

- [2] Yu Nasu (2018). ƎUИИ Efficiently Updatable Neural-Network based Evaluation Functions for Computer Shogi. Ziosoft Computer Shogi Club, pdf (Japanese with English abstract)

- [3] The Stockfish of shogi by Larry Kaufman, CCC, January 07, 2020

- [4] GitHub - yaneurao/YaneuraOu: YaneuraOu is the World's Strongest Shogi engine(AI player), WCSC29 1st winner, educational and USI compliant engine

- [5] GitHub - Tama4649/Kristallweizen: 第29回世界コンピュータ将棋選手権 準優勝のKristallweizenです。

- [6] Stockfish NNUE – The Complete Guide, June 19, 2020 (Japanese and English)

- [7] Stockfish NN release (NNUE) by Henk Drost, CCC, May 31, 2020

- [8] Can the sardine! NNUE clobbers SF by Henk Drost, CCC, July 16, 2020

- [9] NNUE merge · Issue #2823 · official-stockfish/Stockfish · GitHub by Joost VandeVondele, July 25, 2020

- [10] GitHub - nodchip/Stockfish: UCI chess engine by Nodchip

- [11] Re: NNUE accessible explanation by Jonathan Rosenthal, CCC, July 23, 2020

- [12] Image courtesy Roman Zhukov, revised version of the image posted in Re: Stockfish NN release (NNUE) by Roman Zhukov, CCC, June 17, 2020

- [13] An info by Sylwy, CCC, July 25, 2020