Eval Tuning in Deep Thought

Home * Automated Tuning * Eval Tuning in Deep Thought

Eval Tuning in Deep Thought,

this page is a formatted reprint of Andreas Nowatzyk's explanations of the Eval Tuning source code [1] of Deep Thought from January 2000 [2] , hosted by Tim Mann [3] :

Andreas Nowatzyk was one of the contributors to the Deep Thought project while he was in grad school. A few years ago when he and I were both working for Compaq's research labs in Palo Alto, Andreas sent me a copy of Deep Thought's evaluation function tuning program and asked me to put it on the Web for him, since he no longer has an interest in computer chess.

Contents

The Files

in this directory constitute the tuning program that was used by Deep Thought to adjust its evaluation function parameters based on a set of some 868 grand-master games. I forgot where these games came from, but we did not type them in. It was last used in the summer of 1988 and it is believed that this program might be of historical interest to some chess programmers. The Deep Thought hardware is probably no longer functional and without the actual Deep Thought program, this code can only show how DT evaluated chess positions, but it can not play any chess.

This program was written by me during the Spring of 1988 and included suggestions and feedback from the entire Deep Thought team (Feng H. Hsu, Thomas S. Anantharaman, Murray S. Campbell, Mike C. Browne and myself). It includes the DT evaluation function, which was developed by Murray.

This file gives a brief description how this tuning program worked and how to use it. Expect some errors and omissions because I'm writing this from memory, 12 years after I last touched this code.

The Basic Method

The basic method used the mathematical concept of least square fitting. This was hardly new and it had been applied to chess evaluation functions before. However, there are plenty of details on exactly how to go about this, and our approach likely differed from earlier work.

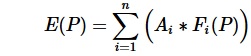

Let's suppose that the evaluation function is a weighted sum of positional features (later referred to as a feature vector):

(= position of pieces on the board plus castle and enpassant status), the evaluation <E(P)> is the sum of the features recognized by Deep Thought <Fi(P)> times the weight given to each feature <Ai>. For example, a feature may be the number of white pawns minus the number of black pawns. The corresponding weight would be the value for one pawn. There were roughly 100 features that Deep Though used. Some were implemented via a piece-placement table that could give a different weight for each piece depending on where it is on the board. For example, a gradient in the pawn value could be used to add a bonus for advanced pawns. King centrality was implemented likewise. There were five other tables in the hardware for more complex features, that could detect open files, passed/doubled pawns etc. (four pawn structure tables of 8192 entries each, a rook evaluation table with 2048 entries and the 1024 entry piece/placement table along with a few special bonus registers made up the DT evaluation hardware. While these nearly 40,000 programmable parameters of the DT hardware could be regarded as the components of DT's evaluation function, they all were derived from the 89 to ~100 parameters mentioned earlier). The basic DT move cycle consisted of computing these tables before every search so that the weights of the evaluation function could be adjusted according to the overall situation of the game (opening, mid-game, endgame, etc.). This took some time, so DT was not good at fast games (keep in mind that DT used '88 hardware and the VME interface from the host, a Sun 3 or 4, was not a spead deamon).

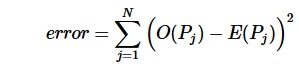

Now suppose you had an oracle that could give the correct evaluation for a position O(P). If we use this oracle on a sufficiently large set of positions <Pj> then we could minimize the squared evaluation error sum:

via partial differentiation of this expression for each parameter <Ai>. This leads to a linear equation system with one equation for each unknown parameter of DT's evaluation function. If the positions are sufficiently varied (they usually were), then this equation system can be solved and out come the best values for our evaluation parameters.

The trouble was, we did not have such an oracle. So the next best thing we had is the evaluation of DT. Murray made some initial guesses for each parameter <Ai> and we used that as a starting point. Obviously, if we use our own <E(P)> as an oracle, we get the same parameters out of the least square fit as we put in. So this is just a cumbersome way to compute the identity: New(Ai) = Old(Ai) for all <i=1..100>. However, this was a great debugging tool to see that we got the mathematics right.

In the tuning case, we did not just take the top-level evaluation, rather we let DT search shallow 3ply trees with quiescence extensions. The evaluation function is then computed symbolically: rather then plugging in values, we propagated the feature vector of the best leaf node to the top. The search itself was controlled by the current best guess of the evaluation parameters. These were full min/max searches, rather than alpha/beta searches. The tuning program cannot actually search these trees because it does not know what a legal chess move is. Instead, the actual DT was used to pre-search these trees and the results were stored in a database (dbf_all). The tuning program merely traverses these trees.

Now we are still lacking an oracle. Instead we assumed that in our collection of grand-master games, the winner of each games should serve as a substitute oracle for DT. Discarding the moves of the losing side and the first few opening moves, we considered all available moves for each positions. Each position was searched and evaluated. Suppose that there were 20 legal moves in one position <Px> then each of these moves lead to a new position <P0> ... <P19>, for which we get the evaluations <E(P0)> ... <E(P19)>. Let's assume that the winning GM played the move leading to <P0> from <Px>. Then there are two cases:

- DT's evaluation concurs, that is: E(P0) > E(P1...19)

- DT evaluated some other move as best.

So the objective of our tuning procedure was to maximize the first case and minimize the second case.

The GM games tell us which moves are better, relative to other moves. So rather than having an absolute oracle that assigns an absolute value to a position, we have a kind of relative oracle: The move from <Px> to <P0> is expected to be better than the moves from <Px> to <P1..19>. Hence <E(P0) - E(Px)> ought to be larger than <E(P1..19) - E(Px)>. These differences of evaluations were used for the tuning process, but this did not change much: we still end up with linear combinations of elements of the feature vector that can be dealt with via least square fitting. In the program and on the display, the evaluations <Ex>, <E0> and <En, n=1...> refer to the evaluation of the root position, the position of the winning GM move and the evaluation of move <n>.

Naturally, not all changes to the evaluation of the board are positional in nature and/or are captured by the evaluation function. The fact that the pre-computed search trees include the results after quiescence search should minimize differences due to exchanges, but this is not always the case. Therefore in order to avoid interference from elements that DT discovers via search and not via its evaluation function, the tuning program includes a threshold: if the evaluation of the root position and the position after a move differ by too much (more than 64 = half of a pawn value), then it is assumed that there is something going on that the evaluation function cannot grasp and this data-point is ignored (out-of-bound).

The first idea was to apply a force function. Instead of using <E(P)> as an oracle, we used <E(P) + G(P)> as an oracle. A simple force function <G(P)> would be used to add a constant force value if the position is the result of the GM move and 0 otherwise.

This doesn't really work as it leads to a value inflation. So instead of adding no bonus for the wrong positions, we add <- force/n>, where <n> is the number of wrong moves (positions).

This is in fact what an early version of the tuning code did. Add a small force as described above, compute a new parameter set via least square approximation and iterate until the number of correctly evaluated positions does not increase anymore.

This works, but it did not really yield better play of DT. It became clear that maximizing the agreement between DT's evaluation and the GM move choices and better play were not really the same thing. Also, DT searched 9+ plys and we could tune only on 3ply searches due to compute time limitations.

We observed that deeper searches in the tuning code lead to better results, even though it could be argued that evaluations should be orthogonal to searching. Perhaps an explanation for this effect is that deeper searches lead to more differences in the positions that are being related because they are more moves apart. Therefore, the tuning process collects more information on how individual components of the evaluation relate to each other. For example, the tuning process did result in a better understanding of the piece-values with respect to each others and as a function of the amount of material left on the board (this was used to control the transitions from opening, mid-game to end-game).

The next refinement was to make the force dependent on the amount of miss-evaluation. If DT is just a little bit off, use a small force, if DT misses the position in a big way, add more force. This relationship was subject to much debate and it is unclear which monotonic function is best suited. Hence, the tuning program gives a number of options from linear, quadratic, square-root, logarithmic, to reciprocal (the idea behind a reciprocal force function was this: if DT is just a little off then there is hope that it can learn how to evaluate this position correctly. If it is off by a lot, don't bother to try - it will only screw up things elsewhere because this is likely due to a concept that is missing from DT's evaluation function). Which force-function to use eventually depended on which parameters were subjected to tuning and became more of a trial and error procedure.

A somewhat different idea for a force function was to count how many moves were evaluated ahead of the GM-move. If this is 0, DT's evaluation function is on target. Numbers greater than 0 are undesirable and lead to an increased correcting force. This number was also used to compute a histogram to see how DT's evaluation function scores against GM moves (see the *.stat files after a multi-iteration tuning run). This is to be taken with a large grain of salt, because the 3ply searches are clearly not good enough to isolate positional evaluation from tactics.

At this point things started to improve somewhat (mostly measured via self-play tournaments starting from various seed positions). But also funny things happened to DT's evaluation function: the range of values that it would produce during a search became smaller. The evaluation function became less discriminative: the values for the good and bad moves were progressively moving closer together. It did match more GM moves and played slightly better, but it also became more erratic. Interestingly, this reduction in value range was not due to simply reducing the weight for the positional components of the evaluation function, rather it involved balancing the various components against each other.

So a compensating force was added to the correction force so that it would also encourage to keep a certain variance of evaluations. You see this in the code commented as variance compensation.

As we learned how to tune more effectively, it became clear that tuning all parameters at once was not necessarily a good idea. Certain parameters were used very infrequently in our set of GM games, so allowing these to be tuned can pick up the wrong idea for lack of sufficient data points. 868 games were not really enough to tune this many parameters.

It also became clear that the best parameter sets were usually obtained after 10-15 iterations, and before the maximum match to the GM games was reached, which typically required 20 to 30 iterations.

Finally, the last improvement of the tuning came after we added the ability to tune tables. In the DT evaluation function are a number of tables, for example the pawn advancement gradient, or the King centrality table. Instead of just making up a linear gradient, we allowed the tuning code to change the values of these table and allow more complex gradients. This resulted in some strange 3D curves that upon inspection by Murray were found to contain some known chess heuristics, which were not originally part of DT's evaluation function (for example: the pawn advancement gradient became more pronounced near the center and tapered off towards the promotion squares). However, the overall impact of table fitting was minor and was never fully exploited because the code became stable only near the end of DT's life and we did not have enough compute cycles to experiment a lot with it. The few experiments we did were great fun because we extracted some general rules out of the game database in an unbiased, neutral and fully automated way. This was quite important because we had to avoid the slightest hint of suspicion that we took anything from the competing Hitech effort, which was the officially funded chess project at CMU at that time. Because of our automated tuning process, we had a demonstratably independent and effective way to incorporate chess knowledge into DT.

Running the Code

I just compiled the tuning code on an Compaq/Alpha Unix workstation and it survived 12 years of bit-rot. So you may get it to run as well. Here are a few hints on how to play with it:

- The database is a binary file and the moves are stored in 2 byte fields. These may need to be swapped depending on the byte-order of your computer. Either enable or disable the "SUN_ENDIANESS" #define.

- When it runs, it needs a very wide terminal window to show the board and all Eval-parameters (142 columns).

- After starting the tuning program via 'texam dbf_all' you should see a small chess board and the set of parameters, their weights and contributions to the current position. At this point there are several single character commands available:

| WWW | ' ' | (space bar) steps through all legal moves |

|---|---|---|

| n | steps through the move played by the GM | |

| N | goes to the beginning of the next game | |

| g | goes to a specific game, the number of which is prompted | |

| f | does a parameter fit | |

| S/R | saves and restores a parameter set to/from a file (*.par) | |

| F | does a multi-iteration fit (a *.stat file will be generated along with parameter file after each iteration. The *.stat file | |

| q | exits the program |

- For the other commands (there are several more, you would need to look into the source code. In particular, there are ways to edit parameters manually and to control if they should participate in a tuning run or not)

- All of this assumes a Unix system with the curses library.

- There are 5 parameter files included in this directory. Q*.par were certain starting points during development and the other ones appeared to be the most recent ones. I don't know which parameter set was used in what DT game: I have hundreds of them and no idea which one was used for what. The USO.par file is probably the set that was used by DT in the 1988 US open tournament.

I would like to thank Feng H. Hsu and Murray Campbell for their permission to release this code. Also let me add a note of appreciation for the Computer Science Department at the Carnegie Mellon University, its open and free-spirited environment made the Deep Thought project possible. If you would like to know more about the roots of Chiptest and Deep Thought, look out for Feng Hsu's upcoming book on his Deep Blue experience [4] .

- Share and enjoy,

- -- A. Nowatzyk

- January 2000

Forum Posts

- Deep Thought's tuning code and eval function! by Severi Salminen, CCC, September 05, 2000

External Links

- Deep Thought at Tim Mann's Chess Pages

- Source code to tune Deep Thought's evaluation in tar.gz format.

- Andreas Nowatzyk's explanations of the the source code, January 2000

References

- ↑ Source code to tune Deep Thought's evaluation in tar.gz format

- ↑ Andreas Nowatzyk's explanations of the the source code, January 2000

- ↑ Deep Thought at Tim Mann's Chess Pages

- ↑ Feng-hsiung Hsu (2002). Behind Deep Blue: Building the Computer that Defeated the World Chess Champion. Princeton University Press