NeuroChess

NeuroChess is an experimental program that learns to play chess from the final outcome of games. It was written by Sebastian Thrun in the early to mid 1990s, while he was at Carnegie Mellon University, with support from Tom Mitchell and, on the domain of chess in particular, advice from Hans Berliner [1]. NeuroChess took its search algorithm from GNU Chess, but its evaluation was based on neural networks, integrating inductive neural network learning, temporal difference learning, and a variant of explanation-based learning [2] [3].


Architecture

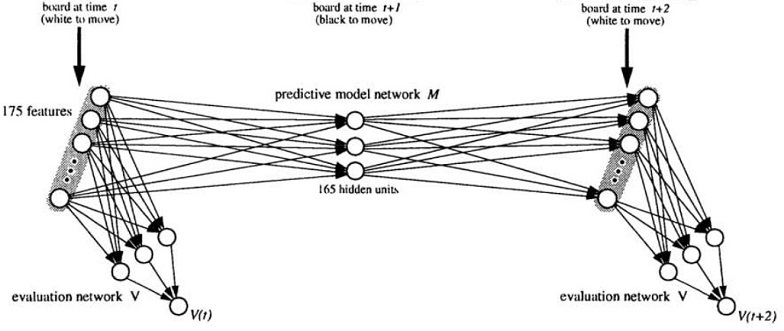

Using the raw board representation as the input to a neural network is a poor choice, since small changes on the board can cause huge differences in value, which conflicts with the smooth nature of neural network representations. NeuroChess therefore maps its internal board into a set of 175 carefully designed features. A specially trained but otherwise conventional three-layer feed-forward neural network (V) with a single output maps the feature vector to an evaluation score.
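The mapping from feature vector to score can be illustrated with a minimal sketch of a three-layer feed-forward pass. This is not Thrun's code: the hidden-layer size, the tanh activation, the weight initialization and the names `init_layer`, `dense` and `evaluate` are assumptions made for illustration. Only the 175 inputs and the single output come from the description above.

```python
import math
import random

N_FEATURES = 175  # number of board features, as stated in the text
N_HIDDEN = 80     # assumption: the text does not give V's hidden-layer size


def init_layer(n_in, n_out, rng):
    """Return (weights, biases) for one layer with small random weights."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def dense(weights, biases, inputs, activation):
    """Apply one fully connected layer followed by an activation."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


def evaluate(params, features):
    """Map a feature vector to a single evaluation score in (-1, 1)."""
    (w1, b1), (w2, b2) = params
    hidden = dense(w1, b1, features, math.tanh)   # input -> hidden
    (score,) = dense(w2, b2, hidden, math.tanh)   # hidden -> one output
    return score


rng = random.Random(0)
params = (init_layer(N_FEATURES, N_HIDDEN, rng), init_layer(N_HIDDEN, 1, rng))

# Placeholder features; a real program would compute them from the board.
features = [rng.random() for _ in range(N_FEATURES)]
score = evaluate(params, features)
```

In a real engine the score would be computed at the leaves of the search, which is where the cost of a network evaluation compared with a linear one becomes noticeable.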

The evaluation network V was trained by backpropagation and the temporal difference method TD(0), with the help of a second neural network: an explanation-based neural network (EBNN) dubbed the chess model M, which maps 175 inputs via 165 hidden units to 175 outputs and aims to predict the (important) board features two plies ahead. M is trained independently, prior to V, to incorporate supervised domain knowledge.

Learning an evaluation function in NeuroChess. Boards are mapped into a high-dimensional feature vector, which forms the input for both the evaluation network V and the chess model M. The evaluation network is trained by backpropagation and the TD(0) procedure. Both networks are employed for analyzing training examples in order to derive target slopes for V. [4]
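The TD(0) part of the training can be sketched as follows: the target value for the last position of a game is the final outcome, and the target for every earlier position is the (discounted) current estimate of the position that follows it. The function name `td0_targets`, the discount factor `gamma` and the outcome encoding (+1 win, 0 draw, -1 loss) are assumptions for illustration, and the sketch covers only value targets, not the slope targets that EBNN derives from M.

```python
def td0_targets(values, outcome, gamma=0.98):
    """Compute TD(0) training targets for one game.

    values  -- V's current estimates for the successive positions of the game
    outcome -- final result of the game (assumed: +1 win, 0 draw, -1 loss)
    gamma   -- discount factor (assumed value, not taken from the text)
    """
    if not values:
        return []
    # Each non-final position is pulled toward the estimate of its successor.
    targets = [gamma * next_value for next_value in values[1:]]
    # The final position is pulled toward the actual outcome of the game.
    targets.append(float(outcome))
    return targets


# Hypothetical estimates for four successive positions of a won game.
values = [0.1, 0.3, 0.2, 0.6]
targets = td0_targets(values, outcome=1)

# Per-position errors that backpropagation would then reduce.
errors = [target - value for target, value in zip(targets, values)]
```

Because only the last target depends on the result of the game, the outcome information reaches earlier positions gradually over many training games.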

Conclusion

After training M on 120,000 grandmaster games and training V on a further 2,400 games, NeuroChess beat GNU Chess in about 13% of games played at a fixed search depth of 2, but in only 10% without EBNN. Furthermore, computing a large neural network function took two orders of magnitude longer than evaluating the linear evaluation function of GNU Chess.


Publications

- Sebastian Thrun (1995). Learning to Play the Game of Chess. in Gerald Tesauro, David S. Touretzky, Todd K. Leen (eds.) Advances in Neural Information Processing Systems 7, MIT Press

- Johannes Fürnkranz, Miroslav Kubat (eds.) (2001). Machines that Learn to Play Games. Advances in Computation: Theory and Practice, Vol. 8, NOVA Science Publishers

- Jacek Mańdziuk (2007). Computational Intelligence in Mind Games. in Włodzisław Duch, Jacek Mańdziuk (eds.) Challenges for Computational Intelligence. Studies in Computational Intelligence, Vol. 63, Springer

- Marco Block, Maro Bader, Ernesto Tapia, Marte Ramírez, Ketill Gunnarsson, Erik Cuevas, Daniel Zaldivar, Raúl Rojas (2008). Using Reinforcement Learning in Chess Engines. Concibe Science 2008, Research in Computing Science: Special Issue in Electronics and Biomedical Engineering, Computer Science and Informatics, Vol. 35

- Jacek Mańdziuk (2010). Knowledge-Free and Learning-Based Methods in Intelligent Game Playing. Studies in Computational Intelligence, Vol. 276, Springer, pp. 124

- István Szita (2012). Reinforcement Learning in Games. in Marco Wiering, Martijn Van Otterlo (eds.). Reinforcement learning: State-of-the-art. Adaptation, Learning, and Optimization, Vol. 12, Springer

Forum Posts

- Re: Chess Evaluation by Srdja Matovic, CCC, June 11, 2011

- Sal or neurochess by ethan ara, CCC, September 06, 2011

External Links

- Sebastian Thrun’s NeuroChess from Machine Learning in Games by Jay Scott, September 2000

References

- [1] Sebastian Thrun (1995). Learning to Play the Game of Chess. Acknowledgment

- [2] Sebastian Thrun, Tom Mitchell (1993). Integrating Inductive Neural Network Learning and Explanation-Based Learning. IJCAI 1993

- [3] Sebastian Thrun (1995). Explanation-Based Neural Network Learning - A Lifelong Learning Approach. Ph.D. thesis, University of Bonn

- [4] Figure 2 from Sebastian Thrun (1995). Learning to Play the Game of Chess.